Ethical Agentic AI: What Matters for Enterprise Trust & Adoption

Explore why ethical Agentic AI matters for enterprise trust, compliance, and sustainable scale before adoption risks turn into real-world setbacks.

Aishwarya K

8/3/2025 · 4 min read

Introduction

Deploying Agentic AI in your company today is akin to transitioning from a team of trained drivers to an invisible fleet of autonomous copilots.

They don’t just follow instructions; they make decisions and act. But if those copilots can’t explain their moves, obey boundaries, or align with your destination, you’re not scaling; you’re steering into fog.

For startup founders, CXOs, growth-stage leaders, and operational leaders, this shift is both powerful and risky. Agentic AI promises speed, but enterprise trust demands clarity, control, and accountability.

And here’s the catch: AI agents aren’t static tools. They’re decision-makers with autonomy. The old way of 'set it and forget it' no longer applies.

In this blog, we break down what makes Agentic AI ethical, enterprise-ready, and adoption-worthy. We also cover what concerns leaders must address early and how Elevin helps you build it right from day one.

Why Ethical Agentic AI Matters to Enterprises

Agentic AI shifts the AI conversation from automation to autonomy. These AI agents don’t just execute; they sense, decide, and adapt. But with independence comes complexity.

Key concerns from enterprise leaders include:

Opaque decision-making: Without visibility into agent reasoning, teams hesitate to rely on their outputs.

Security & compliance risks: Autonomous actions that touch customer data or integrate across systems must adhere to policies.

Brand safety & reputation: An unaligned AI agent making wrong or biased decisions can erode brand trust quickly.

Accountability gaps: If an agent takes the wrong action, who’s responsible? The dev team, the vendor, or the end user?

These are not theoretical worries; they’re what stalls enterprise adoption.

According to McKinsey’s 2023 State of AI report, 56% of organizations cite ethical concerns as a top barrier to AI adoption, ranking even higher than technical challenges.

That’s why ethical frameworks are essential for trust, scaling, and long-term value.

In heavily regulated industries such as finance, healthcare, and legal services, ethical AI deployment must also satisfy compliance mandates, including:

GDPR (General Data Protection Regulation): Agents must respect user consent, the right to be forgotten, and the principles of data minimization.

HIPAA (Health Insurance Portability and Accountability Act): In healthcare, agents interacting with protected health information (PHI) must meet strict privacy and security standards.

SOC 2, ISO/IEC 27001, and PCI DSS: Security protocols around data access, system monitoring, and control automation must be embedded into agent behavior.

Without aligning with these, even a well-functioning agent becomes a liability.

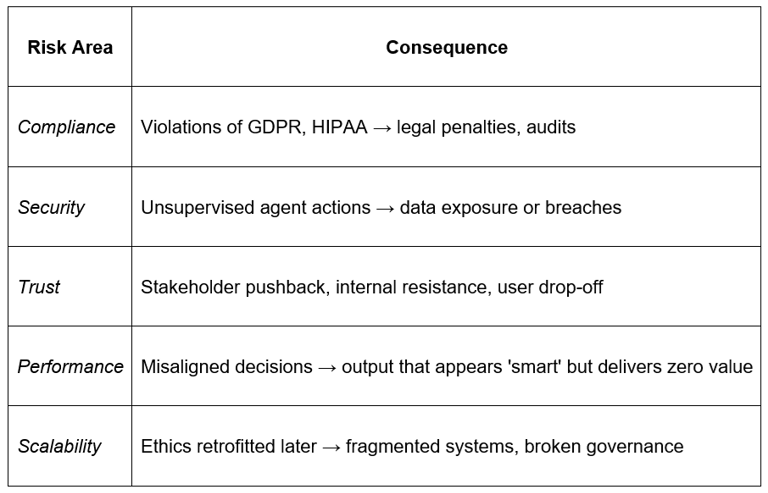

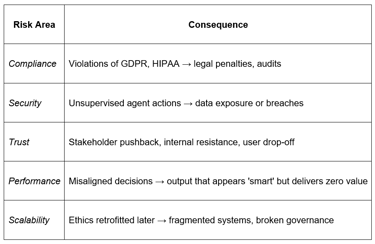

What Happens Without It?

Consider this:

A fraud-detection agent incorrectly flags legitimate transactions. Instead of alerting a human for review, it autonomously freezes accounts across systems. This cascades into customer dissatisfaction, legal scrutiny, and massive manual rollback efforts.

Another case? A customer service agent pulls sensitive medical data to resolve a support ticket, violating HIPAA and triggering fines.

In the enterprise, these aren’t bugs. They’re liabilities with bottom-line impact.

Skipping ethical foundations doesn’t just slow adoption. It introduces compounding risk.

The 5 Pillars of Ethical Agentic AI for Enterprises

Enterprise-grade AI needs more than performance. It needs trust. Based on Elevin’s work with early adopters, we define five core pillars that guide ethical and scalable Agentic AI deployment:

1. Transparent Reasoning

AI agents must provide traceable decision paths. This means:

Logging key inputs and the rationale for each decision

Offering human-readable explanations (“why this, not that”)

Enabling human-reviewable audit trails, both in real time and after deployment
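To make “traceable decision paths” concrete, here is a minimal sketch of a single decision record, assuming a JSON Lines log file. The `DecisionRecord` schema, its field names, and the helper functions are hypothetical illustrations, not a standard or an Elevin API.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class DecisionRecord:
    """One auditable agent decision. Hypothetical schema, for illustration only."""
    agent_id: str
    inputs: dict                 # key inputs the agent considered
    action: str                  # what the agent chose to do ("why this")
    rationale: str               # human-readable explanation
    rejected_alternatives: dict  # "not that": alternative -> reason it was rejected
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def to_log_line(record: DecisionRecord) -> str:
    """Serialize a record to a single JSON line (no trailing newline)."""
    return json.dumps(asdict(record), sort_keys=True)


def append_to_log(record: DecisionRecord, path: str) -> None:
    """Append-only write, so earlier decisions are never edited in place."""
    with open(path, "a", encoding="utf-8") as f:
        f.write(to_log_line(record) + "\n")


# Example: the fraud agent escalates instead of freezing accounts on its own.
example = DecisionRecord(
    agent_id="fraud-triage-01",
    inputs={"transaction_id": "T-1042", "risk_score": 0.91},
    action="escalate_to_human",
    rationale="Risk score is high, but account freezes require human approval.",
    rejected_alternatives={"freeze_account": "outside this agent's autonomy level"},
)
print(to_log_line(example))
```

Each log line captures the inputs, the chosen action, the “why this,” and the “not that,” which is enough for a reviewer to reconstruct a decision after the fact. An append-only file is the simplest option; a production setup would more likely write to tamper-evident storage.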

2. Value Alignment

Agents need boundaries that reflect your enterprise values:

No shortcuts that trade compliance for efficiency

No actions outside defined parameters, even if 'optimal'

Reward structures aligned with long-term KPIs, not short-term hacks

This helps avoid the classic reward-misalignment problem, where a system optimizes a narrow proxy metric at the expense of the real goal, the kind of issue that pushed systems like YouTube’s recommendation algorithm into controversy.
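One way to read “no actions outside defined parameters, even if ‘optimal’” is as a hard check that runs before execution and ignores how well the action scores. The sketch below assumes a hypothetical `ActionBoundary` with an action allowlist and numeric caps; the action names and the refund limit are made-up examples.

```python
from dataclasses import dataclass, field


@dataclass
class ActionBoundary:
    """Hard limits set by the business. Names and values are illustrative."""
    allowed_actions: set[str]
    max_values: dict[str, float] = field(default_factory=dict)  # parameter -> upper limit


def within_bounds(action: str, params: dict[str, float],
                  boundary: ActionBoundary) -> tuple[bool, str]:
    """Return (allowed, reason).

    The agent's reward or confidence score is deliberately not an argument:
    a high-scoring action that breaks a limit is still rejected.
    """
    if action not in boundary.allowed_actions:
        return False, f"action '{action}' is not permitted"
    for name, value in params.items():
        limit = boundary.max_values.get(name)
        if limit is not None and value > limit:
            return False, f"{name}={value} exceeds limit {limit}"
    return True, "within defined parameters"


support_bounds = ActionBoundary(
    allowed_actions={"send_reply", "issue_refund"},
    max_values={"refund_amount": 100.0},
)
print(within_bounds("issue_refund", {"refund_amount": 250.0}, support_bounds))
```

Keeping the objective score out of the check is the design choice that matters: the boundary holds no matter how attractive the out-of-bounds action looks to the agent.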

3. Embedded Governance

Agents should work within guardrails set by legal, compliance, and business stakeholders:

Automatic deactivation when encountering unfamiliar input types

Role-based access controls (RBAC)

Continuous compliance with GDPR, HIPAA, SOC 2, and ISO/IEC 27001

If governance isn’t baked into the agent’s core, you’re building risk into your ops stack.
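A minimal sketch of a governance gate that combines two of the guardrails above: a stop on unfamiliar input types and role-based access control. The roles, permissions, and input types are hypothetical, and “deactivation” is modeled here as halting and escalating to a human, which is one reasonable interpretation rather than the only one.

```python
from enum import Enum

# Hypothetical role -> permissions map, owned by legal, compliance, and business stakeholders.
ROLE_PERMISSIONS: dict[str, set[str]] = {
    "support_agent": {"read_ticket", "send_reply"},
    "billing_agent": {"read_invoice", "issue_refund"},
}

# Input types the agent was validated on; anything else counts as unfamiliar.
KNOWN_INPUT_TYPES = {"ticket", "invoice"}


class GateDecision(Enum):
    ALLOW = "allow"
    DENY = "deny"
    HALT_AND_ESCALATE = "halt_and_escalate"


def governance_gate(role: str, permission: str, input_type: str) -> GateDecision:
    """Check input familiarity first, then role-based access (RBAC)."""
    if input_type not in KNOWN_INPUT_TYPES:
        # Unfamiliar input: stop and hand off to a human instead of improvising.
        return GateDecision.HALT_AND_ESCALATE
    if permission not in ROLE_PERMISSIONS.get(role, set()):
        # Unknown roles get no permissions by default.
        return GateDecision.DENY
    return GateDecision.ALLOW


print(governance_gate("support_agent", "read_ticket", "medical_record"))
```

With a gate like this in front of it, the customer service agent from the HIPAA example above would be stopped at the first medical record it tried to handle, rather than pulling that data into a support ticket.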

4. Contextual Awareness

Context changes, and so must your agents. Ethical agents:

Learn from domain-specific constraints (e.g., B2B vs B2C)

React differently based on stakeholder intent and policy

Avoid 'one-size-fits-all' logic chains

Think of it like adaptive cruise control, which adjusts not just for speed but for terrain, weather, and distance.
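To avoid one-size-fits-all logic, the same request can be routed through different rules depending on context. This sketch assumes hypothetical B2B and B2C policy profiles with placeholder discount limits; real values would come from each business unit.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PolicyProfile:
    """Context-specific rules. Values are placeholders, not recommendations."""
    max_discount_pct: float
    requires_contract_review: bool


# Hypothetical profiles; real limits would come from each business unit's policy owners.
PROFILES = {
    "b2b": PolicyProfile(max_discount_pct=5.0, requires_contract_review=True),
    "b2c": PolicyProfile(max_discount_pct=15.0, requires_contract_review=False),
}


def select_profile(segment: str) -> PolicyProfile:
    """No generic fallback: an unknown context is an error, not a default."""
    if segment not in PROFILES:
        raise ValueError(f"no policy profile for segment '{segment}'")
    return PROFILES[segment]


def plan_discount(segment: str, requested_pct: float) -> str:
    """Decide how to handle a discount request under the current context's rules."""
    profile = select_profile(segment)
    if requested_pct > profile.max_discount_pct:
        return "escalate"
    if profile.requires_contract_review:
        return "route_to_contract_review"
    return "apply"


for segment, pct in [("b2c", 10.0), ("b2b", 10.0), ("b2b", 3.0)]:
    print(segment, pct, plan_discount(segment, pct))
```

Refusing to fall back to a default profile is deliberate: a context the agent was never configured for is exactly where generic logic is most likely to misfire.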

5. Human Control by Design

The most ethical Agentic AI still gives humans final say:

Soft overrides for human operators

Configurable autonomy levels (e.g., suggest-only, approve-required, auto-execute)

Chat-based (Slack-style) status updates and intervention points

This creates a symbiosis in which humans focus on judgment and agents handle execution.
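The three autonomy levels listed above map naturally onto a small dispatcher. In this sketch, `approve` and `execute` are hypothetical callbacks standing in for a human approval step (for example, a chat prompt) and the real side effect.

```python
from enum import Enum
from typing import Callable


class AutonomyLevel(Enum):
    SUGGEST_ONLY = "suggest-only"
    APPROVE_REQUIRED = "approve-required"
    AUTO_EXECUTE = "auto-execute"


def handle_action(level: AutonomyLevel, action: str,
                  approve: Callable[[str], bool],
                  execute: Callable[[str], None]) -> str:
    """Route a proposed action according to the configured autonomy level."""
    if level is AutonomyLevel.SUGGEST_ONLY:
        # The agent only recommends; a human decides and acts.
        return f"suggested: {action}"
    if level is AutonomyLevel.APPROVE_REQUIRED:
        if not approve(action):
            # Human override: the action is dropped and nothing is executed.
            return f"rejected: {action}"
        execute(action)
        return f"executed after approval: {action}"
    # AUTO_EXECUTE: the agent acts without a human in the loop.
    execute(action)
    return f"executed: {action}"


executed: list[str] = []
print(handle_action(AutonomyLevel.APPROVE_REQUIRED, "freeze_account",
                    approve=lambda a: False, execute=executed.append))
```

Setting the fraud agent from the earlier example to `APPROVE_REQUIRED` for account freezes would have put a human between the false positive and the frozen accounts.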

How Elevin Helps Build Ethical Agentic AI

At Elevin, we work with businesses to design and deploy Agentic AI that’s not only capable but also compliant, controllable, and trustworthy.

Here’s how:

🔹 Role-Specific Agent Design

Each agent is purpose-built with clear boundaries: what it can access, act on, and escalate.

🔹 Audit-Friendly AgentOps Layer

We bake traceability into every action, so you know who (or what) did what, when, and why.

🔹 Bias Minimization Frameworks

Our process includes adversarial testing and fairness checks to mitigate harmful outcomes.

🔹 Privacy-First Architecture

From edge inference to data residency controls, we ensure your agents respect enterprise privacy mandates.

🔹 Trust Dashboards

Visualize agent behavior in real time. Spot anomalies, monitor decisions, and intervene when needed.

🔹 Ethical Drift Detection

We flag deviations in agent behavior that could signal unintended outcomes or evolving risks.
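As an illustration only (not necessarily how Elevin’s tooling works), one simple way to flag behavioral drift is to compare the mix of actions an agent takes in a recent window against a baseline window, using total variation distance. The 0.2 threshold is an arbitrary placeholder that would need tuning.

```python
from collections import Counter


def action_distribution(actions: list[str]) -> dict[str, float]:
    """Share of each action type within a window of agent decisions."""
    if not actions:
        raise ValueError("window must contain at least one action")
    counts = Counter(actions)
    total = len(actions)
    return {action: n / total for action, n in counts.items()}


def total_variation(p: dict[str, float], q: dict[str, float]) -> float:
    """Half the summed absolute difference: 0 means identical, 1 means disjoint."""
    keys = set(p) | set(q)
    return 0.5 * sum(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in keys)


def drift_alert(baseline: list[str], recent: list[str], threshold: float = 0.2) -> bool:
    """Return True when recent behavior has shifted enough to warrant human review."""
    distance = total_variation(action_distribution(baseline), action_distribution(recent))
    return distance > threshold


baseline = ["approve"] * 90 + ["escalate"] * 10
recent = ["approve"] * 50 + ["freeze_account"] * 50
print(drift_alert(baseline, recent))
```

A check like this only says that behavior changed, not whether the change is harmful; its job is to route the alert to a person who can make that call.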

🔹 Integrated Governance Support

From documentation to regulatory mapping, we help teams meet compliance and audit needs with less friction.

According to PwC's 2024 US Responsible AI Survey, 46% of organizations view Responsible AI as a way to achieve competitive differentiation.

Ethics isn't just a compliance checkbox. It’s a catalyst for enterprise AI adoption and long-term advantage.

Final Thoughts

Enterprises treating Agentic AI as "just another automation" will find themselves rebuilding systems they should have designed right the first time. Trust isn't a ‘nice-to-have’ feature. It's the foundation that determines whether your AI scales or fails.

The companies deploying ethical AI agents today aren't just avoiding tomorrow's crises. They're building sustainable competitive advantages while their competitors deal with algorithmic bias, compliance violations, and stakeholder backlash.

Just getting started with AI? We’ll help you design agents that are intelligent, ethical, and enterprise-ready from day one.

Already have AI systems running? Let's audit your current setup to identify risks and optimization opportunities before they become costly problems.

Let’s build AI that’s not just smart, but ethically sound from the ground up.

Elevin Consulting: Your Partner in Growth.

© 2025 Elevin Consulting Pvt Ltd. All Rights Reserved

hello@elevinconsulting.com